AI-Powered Todo MCP Server (Demo)

Learn how to implement a sophisticated todo application using MCP (Model Context Protocol) for seamless AI-human interaction through natural language processing and conversational interfaces.

The Model Context Protocol (MCP) is an open protocol that lets AI assistants discover and call tools exposed by external systems. This guide demonstrates how to build a demo todo management system on top of MCP: the language model interprets natural-language requests, and the MCP server executes the corresponding operations through its tool functions.

In this technical deep-dive, we'll explore the architectural patterns, implementation strategies, and best practices for building AI-powered applications that can interpret complex user intents and execute corresponding actions through conversational interfaces.

System Demonstration

The following demonstrations show the MCP server in use from LM Studio: discovering the available tools, answering natural-language queries, and updating todo state in response to conversational commands.

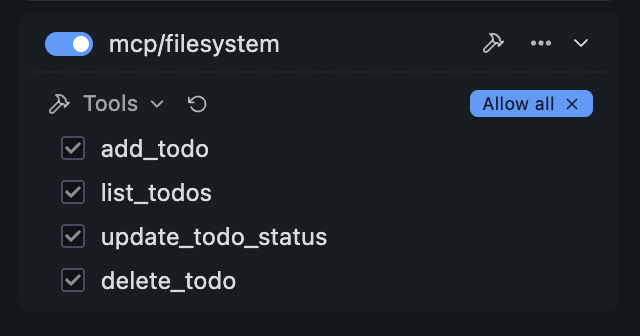

Tool Discovery and Function Enumeration

The MCP server exposes its available functions to the AI assistant, so the assistant can discover which tools exist and what parameters each one takes.

The LM Studio interface displays the complete function registry, including method signatures, parameter types, and documentation strings for each available operation.
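To make the idea of a function registry concrete, here is a minimal sketch of how a tool descriptor (name, description, input schema) could be derived from a plain Python function. It uses only the standard library and is not the MCP SDK's actual implementation; the tool functions `add_todo` and `list_todos` and the helper `describe_tool` are hypothetical names chosen for illustration.

```python
import inspect
from typing import Callable, get_type_hints

# Map Python annotations to JSON Schema type names (illustrative subset only).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable) -> dict:
    """Build a tool descriptor from a function's signature and docstring."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unknown types fall back to "string" in this sketch.
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


# Hypothetical todo tools; the real server's function names may differ.
def add_todo(title: str, priority: int = 1) -> dict:
    """Create a new todo item."""
    return {"title": title, "priority": priority, "done": False}


def list_todos(only_open: bool = False) -> list:
    """List todo items, optionally only the unfinished ones."""
    return []
```

Calling `describe_tool(add_todo)` yields a dictionary with `title` marked as required and `priority` typed as an integer, which is the kind of information a client needs in order to show signatures and documentation strings for each operation.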

Conversational Query Processing

The language model converts user intents expressed in natural language into structured tool calls, which the server executes as database operations.

Real-time execution of complex queries through conversational interfaces, showcasing semantic parsing and context-aware response generation.
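As a rough illustration of what happens after the model has interpreted a request, the sketch below routes a structured tool call (a tool name plus JSON arguments) to a matching handler. The in-memory `TODOS` list, the handler names, and the `dispatch` helper are all assumptions made for this example; the demo itself may store data and name its tools differently.

```python
from typing import Any, Callable, Optional

# In-memory stand-in for the todo store (assumption; not the demo's storage).
TODOS = [
    {"id": 1, "title": "Write report", "done": False},
    {"id": 2, "title": "Buy milk", "done": True},
    {"id": 3, "title": "Book flights", "done": False},
]


def list_todos(done: Optional[bool] = None) -> list:
    """Return todos, optionally filtered by completion state."""
    return [t for t in TODOS if done is None or t["done"] == done]


def search_todos(keyword: str) -> list:
    """Return todos whose title contains the keyword (case-insensitive)."""
    return [t for t in TODOS if keyword.lower() in t["title"].lower()]


# Hypothetical registry mapping tool names to handlers.
HANDLERS: dict = {"list_todos": list_todos, "search_todos": search_todos}


def dispatch(call: dict) -> Any:
    """Route a call of the form {"name": ..., "arguments": {...}} to its handler."""
    handler: Optional[Callable[..., Any]] = HANDLERS.get(call["name"])
    if handler is None:
        raise ValueError(f"unknown tool: {call['name']}")
    return handler(**call.get("arguments", {}))
```

For a request such as "What do I still have to do?", the model might emit `{"name": "list_todos", "arguments": {"done": False}}`, and `dispatch` would return the two unfinished items from the sample data.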

Dynamic State Management

Create, read, update, and delete (CRUD) operations are executed through natural language commands, with the server keeping the stored data consistent and returning immediate feedback.

Seamless state transitions and transactional operations performed through conversational commands, highlighting the system's reliability and user experience optimization.
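One way to back such commands with transactional storage is sketched below using Python's built-in `sqlite3` module. The table layout and the function names (`connect`, `create_todo`, `complete_todo`, `delete_todo`, `get_todos`) are assumptions for illustration, not the demo's actual schema or API.

```python
import sqlite3


def connect(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and make sure the (assumed) todos table exists."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row
    conn.execute(
        "CREATE TABLE IF NOT EXISTS todos ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "title TEXT NOT NULL, "
        "done INTEGER NOT NULL DEFAULT 0)"
    )
    return conn


def create_todo(conn: sqlite3.Connection, title: str) -> int:
    # "with conn" commits on success and rolls back if an exception is raised.
    with conn:
        cur = conn.execute("INSERT INTO todos (title) VALUES (?)", (title,))
    return cur.lastrowid


def complete_todo(conn: sqlite3.Connection, todo_id: int) -> bool:
    with conn:
        cur = conn.execute("UPDATE todos SET done = 1 WHERE id = ?", (todo_id,))
    return cur.rowcount == 1  # False when no such todo exists


def delete_todo(conn: sqlite3.Connection, todo_id: int) -> bool:
    with conn:
        cur = conn.execute("DELETE FROM todos WHERE id = ?", (todo_id,))
    return cur.rowcount == 1


def get_todos(conn: sqlite3.Connection) -> list:
    rows = conn.execute("SELECT id, title, done FROM todos ORDER BY id").fetchall()
    return [dict(row) for row in rows]
```

Returning a boolean from the update and delete helpers gives the assistant something definite to report back, for example that a todo it was asked to complete does not exist.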

Configuration and Deployment

Step 1: LM Studio Installation and Model Setup

- Download LM Studio: Install the latest version from the official LM Studio website

- Model Selection: Download a compatible language model (recommended: gemma3 or llama3.1)

- Performance Optimization: Configure GPU acceleration if available for improved inference speed

Step 2: MCP Server Configuration

Configure the MCP server integration by editing the mcp.json configuration file in LM Studio's Program tab:

    {
      "mcpServers": {
        "todos": {
          "command": "uv",
          "args": ["--directory", "/your/path/to/mcp-server", "run", "main.py"],
          "env": {
            "PYTHONPATH": "/your/path/to/mcp-server",
            "LOG_LEVEL": "INFO"
          }
        }
      }
    }

Step 3: Server Initialization and Testing

- Server Activation: Enable the MCP server in LM Studio's interface

- Connection Verification: Verify successful tool discovery and function enumeration

- Functional Testing: Execute test commands to validate CRUD operations

- Performance Monitoring: Monitor response times and resource utilization
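If the server does not appear after activation, a quick sanity check of the mcp.json content from Step 2 can rule out simple mistakes. The sketch below is a hypothetical pre-flight helper; the rules it applies are this example's own assumptions about a well-formed entry, not LM Studio's actual validation logic.

```python
import json


def check_mcp_config(text: str) -> list:
    """Return a list of human-readable problems found in an mcp.json document."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]

    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict) or not servers:
        return ["missing or empty 'mcpServers' object"]

    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be an object")
            continue
        if not isinstance(entry.get("command"), str):
            problems.append(f"{name}: 'command' must be a string")
        args = entry.get("args", [])
        if not (isinstance(args, list) and all(isinstance(a, str) for a in args)):
            problems.append(f"{name}: 'args' must be a list of strings")
        env = entry.get("env", {})
        if not (isinstance(env, dict) and all(isinstance(v, str) for v in env.values())):
            problems.append(f"{name}: 'env' must map names to strings")
    return problems
```

An empty result means the file passed these basic checks; it does not guarantee that the configured path exists or that the server starts.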

Step 4: Production Considerations

- Error Handling: Implement comprehensive exception management

- Logging: Configure structured logging for debugging and monitoring

- Security: Implement authentication mechanisms for production deployments

- Scalability: Consider horizontal scaling strategies for high-throughput scenarios
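The error handling and logging points can be combined in a small wrapper around each tool handler. The following is a minimal sketch under stated assumptions: the decorator name `safe_tool`, the logger name `todo_mcp`, and the result shape (`ok`, `result`, `error`) are invented for this example. It reads the `LOG_LEVEL` environment variable from the configuration in Step 2 and logs to stderr, which matters for servers launched over standard input/output because stdout carries the protocol messages.

```python
import functools
import json
import logging
import os
import time

logger = logging.getLogger("todo_mcp")  # hypothetical logger name


def configure_logging() -> None:
    """Set the log level from LOG_LEVEL; basicConfig writes to stderr by default."""
    level = os.environ.get("LOG_LEVEL", "INFO").upper()
    logging.basicConfig(level=getattr(logging, level, logging.INFO), format="%(message)s")


def safe_tool(func):
    """Log one JSON line per call and turn exceptions into error results."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = {"ok": True, "result": func(*args, **kwargs)}
        except Exception as exc:  # broad on purpose so one bad call cannot stop the server
            result = {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
        record = {
            "tool": func.__name__,
            "ok": result["ok"],
            "ms": round((time.perf_counter() - start) * 1000, 2),
        }
        logger.log(logging.INFO if result["ok"] else logging.ERROR, json.dumps(record))
        return result

    return wrapper


@safe_tool
def complete_todo(todo_id: int) -> str:
    """Hypothetical tool used to exercise the wrapper."""
    if todo_id <= 0:
        raise ValueError("todo_id must be positive")
    return f"todo {todo_id} completed"
```

With this in place, `complete_todo(0)` returns an error result instead of raising, and each call leaves a machine-readable log line that includes its duration, which also covers basic response-time monitoring.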

Source Code and Documentation

The complete implementation, including comprehensive documentation and testing suites, is available in our GitHub repository: